Google Gemini 3.0: Next-Gen AI Model Release & Features

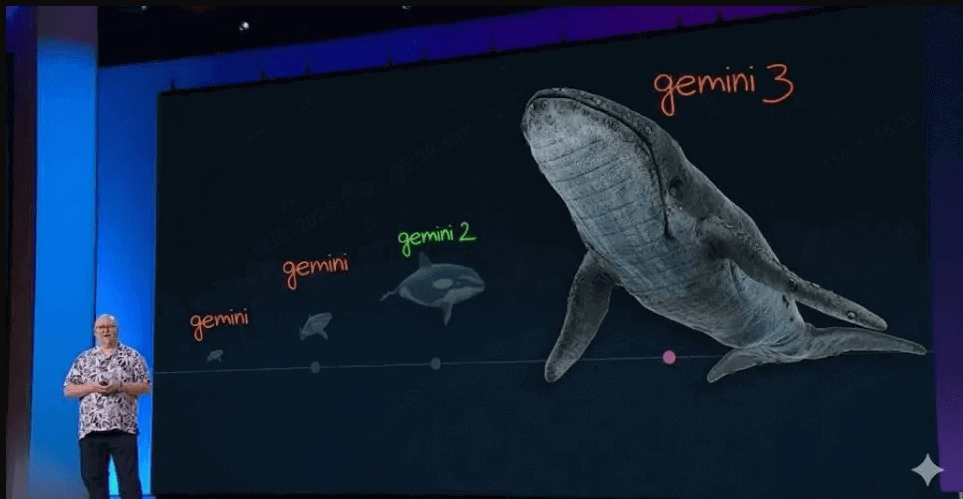

Google officially launched Gemini 3.0 on November 19, 2025, marking the next major milestone in its Gemini AI series.

Building on Gemini 1.5 and 2.5, Gemini 3.0 introduces advanced multimodal understanding, ultra-long context processing, and seamless integration across Google services. It combines text, audio, video, and spatial reasoning in a single AI agent, with the aim of changing how people interact with computers.


What Is Gemini 3.0?

Gemini 3.0 is Google's third-generation multimodal AI model, built by Google DeepMind. It is designed to combine advanced reasoning, tool orchestration, and ultra-long context capabilities with improved efficiency.

Unlike traditional chatbots, Gemini 3.0 is designed to function as an intelligent "AI agent" that can analyze multiple input types at once: text, images, video, audio, and possibly even 3D scenes.


Key Features and Capabilities

Million-Token Context Window

Gemini 3 Pro supports a context window of up to 1 million tokens, compared with roughly 200,000 tokens in many standard model versions. This capacity lets the model analyze entire codebases, lengthy documents, or research datasets in a single request.

Practical impact:

Enhanced long-form document understanding

Precise multi-step reasoning

Smarter enterprise workflow automation
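To get a feel for how much a 1-million-token window holds, the sketch below estimates token counts with a common rule of thumb of about four characters per token for English text. That ratio is an assumption, not Gemini's actual tokenizer, and the `reserve_for_output` headroom is a hypothetical parameter; for exact numbers, use the token-counting method the Gemini API provides.

```python
import math

# Assumption: ~4 characters per token is a rough heuristic for English text.
# Gemini's real tokenizer will differ, so treat results as estimates only.
CHARS_PER_TOKEN = 4
CONTEXT_LIMIT = 1_000_000  # the 1M-token window described above


def estimate_tokens(text: str) -> int:
    """Roughly estimate the number of tokens in `text`."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(documents: list[str], limit: int = CONTEXT_LIMIT,
                    reserve_for_output: int = 8_192) -> bool:
    """Return True if all documents, plus room for the answer, fit in one request."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total + reserve_for_output <= limit


if __name__ == "__main__":
    report = "quarterly revenue grew " * 10_000  # 230,000 characters
    print(estimate_tokens(report))
    print(fits_in_context([report]))
```

Under this heuristic, the 230,000-character sample comes to about 57,500 tokens, a small fraction of the window.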

Full-Modality Reasoning

Gemini 3 Pro integrates five modalities (text, image, code, audio, and video), allowing seamless interaction across them. It can interpret a chart, explain the underlying data, generate related code, and even produce an explanatory video clip.

Unlike prior models that only "describe" images, Gemini 3 Pro can infer causal relationships, logical structures, and contextual meaning within visual or audiovisual inputs.
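As a rough illustration of what multimodal input looks like at the API level, the sketch below assembles one request that pairs a text question with an image. The JSON shape (`contents` holding `parts`, with `inline_data` carrying a `mime_type` and base64 `data`) is modeled on the Gemini REST API's `generateContent` request body as publicly documented; field names can change, so check the current API reference. `build_multimodal_request` is a hypothetical helper, and nothing is sent over the network.

```python
import base64
import json
import mimetypes


def build_multimodal_request(prompt: str, media: dict[str, bytes]) -> dict:
    """Build a generateContent-style body mixing text with inline media.

    `media` maps file names (used only to guess the MIME type) to raw bytes.
    """
    parts = [{"text": prompt}]
    for filename, payload in media.items():
        mime_type, _ = mimetypes.guess_type(filename)
        if mime_type is None:
            raise ValueError(f"cannot infer MIME type for {filename!r}")
        parts.append({
            "inline_data": {
                "mime_type": mime_type,
                # Binary media travels base64-encoded inside the JSON body.
                "data": base64.b64encode(payload).decode("ascii"),
            }
        })
    return {"contents": [{"role": "user", "parts": parts}]}


if __name__ == "__main__":
    placeholder_png = b"\x89PNG\r\n\x1a\n"  # placeholder bytes, not a real chart
    body = build_multimodal_request(
        "Explain the trend in this chart and write Python code to reproduce it.",
        {"sales_chart.png": placeholder_png},
    )
    print(json.dumps(body, indent=2))
```

Audio or video clips would be added the same way, as extra parts with their own MIME types.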

Deep Reasoning and Task Planning

Gemini 3 Pro introduces stronger reasoning and planning capabilities. It can sustain multi-stage logic, decompose complex tasks, make informed decisions, and dynamically call APIs or external tools during inference.

This architecture enables Gemini 3 Pro to power next-generation AI agents—such as automated analysts, intelligent customer support, or multi-step business workflows.
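Calling tools during inference is usually wired up on the application side as a loop: the model proposes a function call, the application runs it, and the result goes back to the model until it produces a final answer. The sketch below simulates that loop offline with a scripted stand-in for the model. `fake_model`, the tool names, and the message format are all hypothetical and are not Gemini's actual function-calling schema.

```python
from typing import Callable


# Hypothetical local tools the agent is allowed to call.
def get_revenue(quarter: str) -> float:
    data = {"Q1": 120.0, "Q2": 150.0}
    return data[quarter]


def percent_change(old: float, new: float) -> float:
    return round((new - old) / old * 100, 1)


TOOLS: dict[str, Callable[..., float]] = {
    "get_revenue": get_revenue,
    "percent_change": percent_change,
}


def fake_model(history: list[dict]) -> dict:
    """Scripted stand-in for a model: picks the next step from earlier tool results."""
    results = [m["result"] for m in history if m["role"] == "tool"]
    if len(results) == 0:
        return {"call": "get_revenue", "args": {"quarter": "Q1"}}
    if len(results) == 1:
        return {"call": "get_revenue", "args": {"quarter": "Q2"}}
    if len(results) == 2:
        return {"call": "percent_change", "args": {"old": results[0], "new": results[1]}}
    return {"answer": f"Revenue grew {results[2]}% from Q1 to Q2."}


def run_agent(task: str, max_steps: int = 5) -> str:
    """Alternate between model decisions and local tool execution."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        step = fake_model(history)
        if "answer" in step:  # the model signals it is finished
            return step["answer"]
        tool = TOOLS[step["call"]]      # look up the requested tool
        result = tool(**step["args"])   # execute it locally
        history.append({"role": "tool", "name": step["call"], "result": result})
    raise RuntimeError("agent did not finish within max_steps")


if __name__ == "__main__":
    print(run_agent("How much did revenue grow from Q1 to Q2?"))
```

The `max_steps` cap is a simple safeguard so that a model which never settles on an answer cannot loop indefinitely.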

Estimated 1.2-Trillion-Parameter Architecture

Google has not officially confirmed the model's size, but developer-community estimates put Gemini 3 Pro at approximately 1.2 trillion parameters. Its training data reportedly extends through August 2024 and includes text, images, video, multilingual data, and code, making Gemini 3 Pro one of the most broadly trained models available.

Native Multilingual Mastery

Gemini 3.0 is designed to provide natural fluency in more than 200 languages. Early test results suggest significant improvements in translation consistency, context preservation, and emotion detection compared with Gemini 1.5 Pro. This positions it as a global-scale model optimized for real-time multilingual communication.

Enhanced AI Safety and Alignment

Google emphasizes trust, transparency, and responsible AI in Gemini 3's development. The new version integrates multi-layer alignment checks, ethical reasoning, and human-in-the-loop supervision to reduce hallucinations and improve factual reliability, especially in professional and enterprise use.

Real-World Examples of Gemini 3.0 in Action

Cross-Media Marketing Content Creation: Gemini 3.0 can generate complete marketing campaigns from a single text prompt:

Draft ad copy for social media

Create promotional images

Generate video ads with voiceover narration

Massive Codebase Analysis and Debugging: Developers can leverage Gemini 3.0’s million-token context window to analyze huge software repositories:

Detect bugs or vulnerabilities across very large codebases

Suggest optimized algorithms or refactoring strategies

Auto-generate test cases or scripts
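A common way to use a long context window for repository analysis is to concatenate source files into one prompt, each prefixed with its path so the model can point to specific files. The sketch below does this within a size budget. The 3.6-million-character budget assumes roughly four characters per token (about 900,000 tokens, leaving headroom for instructions and the answer); `pack_repository`, the `### FILE:` header, and the suffix list are illustrative choices, not an official Gemini convention.

```python
from pathlib import Path

# Assumption: ~4 characters per token, so 3.6M characters is roughly 900K tokens,
# leaving headroom in a 1M-token window for instructions and the model's answer.
DEFAULT_BUDGET_CHARS = 3_600_000
SOURCE_SUFFIXES = (".py", ".js", ".ts", ".go", ".java")


def pack_repository(root: str, budget_chars: int = DEFAULT_BUDGET_CHARS,
                    suffixes: tuple[str, ...] = SOURCE_SUFFIXES) -> tuple[str, list[str]]:
    """Concatenate source files under `root` into one prompt, within a size budget.

    Each file is preceded by a header line naming its relative path.
    Returns the packed text and the paths skipped for lack of space.
    """
    chunks: list[str] = []
    skipped: list[str] = []
    used = 0
    # Sorting makes the packed prompt deterministic across runs.
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix not in suffixes:
            continue
        rel = path.relative_to(root).as_posix()
        block = f"### FILE: {rel}\n{path.read_text(encoding='utf-8', errors='replace')}\n"
        if used + len(block) > budget_chars:
            skipped.append(rel)  # too large for the remaining budget
            continue
        chunks.append(block)
        used += len(block)
    return "".join(chunks), skipped
```

Calling `pack_repository("path/to/repo")` returns the packed text, ready to be placed ahead of a question such as "List likely null-pointer bugs", along with any files that did not fit and might go into a follow-up request.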

Scientific Research Assistant: Researchers can input datasets, research papers, charts, and experiment videos, and Gemini 3.0 can:

Summarize key findings

Highlight causal relationships in data

Generate visual or video explanations

Multimodal Search and Discovery: Users can input images, video clips, or audio recordings, and Gemini 3.0 can:

Identify objects, events, or topics

Generate text summaries, video highlights, or related multimedia content

Merge media types into a single actionable output

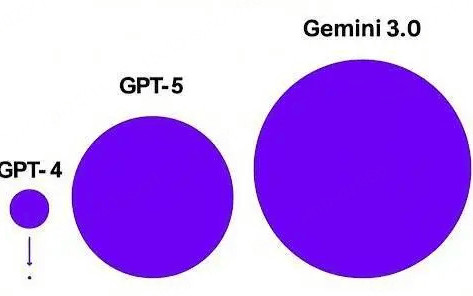

Gemini 3.0 vs Gemini 2.5 and GPT-5

| Model | Developer | Core Focus | Unique Advantage |
|---|---|---|---|
| Gemini 2.5 | Google DeepMind | Text + image understanding | Balanced multimodality |
| Gemini 3.0 | Google DeepMind | Full multimodal (video, 3D, audio) + reasoning | Cross-platform agent integration |
| GPT-5 | OpenAI | General-purpose reasoning | Large ecosystem (ChatGPT + API) |
| Claude 4.5 | Anthropic | Context and safety | Constitutional AI and large memory |

Gemini 3.0 aims to compete directly with GPT-5 by offering greater context awareness and stronger multimodal intelligence, while emphasizing reliability and speed.

When was Gemini 3.0 released?

Gemini 3.0 was officially released on November 19, 2025, with immediate developer access and public availability rolling out via Google Cloud Vertex AI and integrated applications.

Why Gemini 3.0 Matters

For Developers

Access to a powerful multimodal API that understands voice, video, and visual cues.

Ability to build cross-media applications—like AI-driven video editors, analytics dashboards, and real-time assistants.

Seamless deployment via Google Cloud Vertex AI.
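On Vertex AI, Google's models are called through REST endpoints scoped to a project and location. The helper below only builds the URL for a `generateContent` call; the pattern (a `<location>-aiplatform.googleapis.com` host for regional locations, the plain `aiplatform.googleapis.com` host for `global`) follows Vertex AI's publicly documented publisher-model endpoints, so verify it against the current docs. The project, location, and `MODEL_ID` values are placeholders; look up the exact Gemini 3 model ID in the Vertex AI Model Garden. The POST request itself also needs an OAuth 2.0 access token in an `Authorization: Bearer` header, for example one printed by `gcloud auth print-access-token`.

```python
from urllib.parse import quote


def vertex_generate_content_url(project_id: str, location: str, model_id: str) -> str:
    """Build the Vertex AI REST URL for a generateContent call on a Google model.

    Regional locations use a "<location>-aiplatform" host; the "global"
    location uses the plain "aiplatform.googleapis.com" host.
    """
    for name, value in (("project_id", project_id), ("location", location),
                        ("model_id", model_id)):
        if not value:
            raise ValueError(f"{name} must be non-empty")
    host = ("aiplatform.googleapis.com" if location == "global"
            else f"{location}-aiplatform.googleapis.com")
    return (
        f"https://{host}/v1/projects/{quote(project_id)}/locations/{quote(location)}"
        f"/publishers/google/models/{quote(model_id)}:generateContent"
    )


if __name__ == "__main__":
    # Placeholder values: substitute your own project and the real model ID.
    print(vertex_generate_content_url("my-project", "us-central1", "MODEL_ID"))
```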

For Businesses

Smarter AI assistants across customer support, analytics, and marketing.

Easier automation through Gemini 3.0 integrations with Google Workspace and enterprise tools.

Reduced operational costs with more efficient AI inference.

For Consumers

Smarter Search and Gmail experiences with contextual understanding.

More creative freedom through AI-powered video, audio, and image generation.

Personalized recommendations powered by deeper context learning.

How to Prepare for Gemini 3.0

Subscribe for developer updates on Google Cloud Vertex AI.

Compare Gemini 3.0 with Gemini 2.5 or Gemini Advanced on your existing tasks to gauge what has improved.

Audit your workflows for tasks that could benefit from multimodal AI.

Train teams to use AI APIs, since Gemini 3.0 emphasizes tool orchestration.

Conclusion

Gemini 3.0 represents Google's boldest step toward unified, multimodal AI. With its ability to process text, images, audio, and video in real time, it's poised to reshape how we work, create, and communicate.

Now that Gemini 3.0 has officially launched, developers and businesses can start taking advantage of its advanced APIs and integration potential. Whether you're an enterprise innovator or an AI enthusiast, Gemini 3.0 is shaping up to be a defining milestone in the evolution of intelligent systems.
