Seedance 1.5 Pro Review: A/V Sync Mastery vs. 1.0 Stability & Our 2.0 Predictions

We've all used AI video tools. The results were often impressive... if you ignored the shaky camera, the awkward character glitches, and the utterly mismatched sound. For professionals, this meant AI was a novelty, not a tool.

That changes now.

The Seedance family of models is solving the two biggest production headaches: stability and synchronization.

This hands-on review cuts straight to the proof. We put the foundational Seedance 1.0 and the advanced Seedance 1.5 Pro through a series of practical stress tests.


Seedance 1.0: The Bedrock of Visual Stability

The initial excitement around early AI video often faded when creators attempted to integrate the clips into actual projects. While early models could generate striking still-frame compositions, the resulting videos frequently suffered from a lack of temporal consistency: objects flickering, textures dissolving, and, most critically, unstable camera motion.

Seedance 1.0 didn't just generate video; it solved the foundational problem of visual consistency. Its enduring value—the "long-tail" benefit that will never become outdated—lies in its mastery of two core principles: Motion Plausibility and Multi-shot Coherence. These are not feature add-ons; they are the essential physics and grammar of cinematic AI.

Core Strength: Establishing Cinematic Grammar

- Motion Plausibility: 1.0 ensures that movements within the scene are smooth, continuous, and adhere to a believable, albeit generated, physics. It stabilized the AI's "hand."

- Multi-shot Coherence: For the first time, creators could generate successive shots (e.g., a wide shot followed by a close-up) where the character, setting, and even the lighting maintained a high degree of fidelity and consistency.

Practical Stress Test: The Motion Stability Challenge

To truly assess 1.0’s core competency, we designed a prompt that actively challenges the model's ability to maintain focus, track movement, and keep the camera work stable under pressure.

The Test Prompt:

"A character dressed in tactical gear is running quickly across a muddy, uneven terrain. They suddenly perform a high leap to clear a fallen log obstacle. The camera maintains a smooth, tracking shot from a low angle, following the character's movement precisely."

Seedance 1.0 demonstrated a clear capability to execute complex directorial instructions—not just what the scene contains, but how the camera should move. This stability is the necessary precondition for any advanced AI video work. While 1.0 lacks the audio capabilities of its successor, its contribution to foundational visual stability makes it an indispensable benchmark.
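For readers who want a number to go with the visual impression, camera stability can be roughly quantified. The sketch below is not the method used in this review. It assumes you already have a per-frame horizontal position for the tracked subject (from any tracking tool), and the function name `jitter_score` is hypothetical. It computes the RMS of frame-to-frame second differences, so a constant-speed tracking shot scores near zero and a shaky one scores higher.

```python
import math


def jitter_score(positions):
    """RMS of second differences of a 1-D position track (pixels per frame^2).

    A smooth, constant-velocity track gives 0.0; frame-to-frame shake raises it.
    """
    if len(positions) < 3:
        raise ValueError("need at least three frames")
    # Discrete acceleration at each interior frame.
    accel = [
        positions[i + 1] - 2 * positions[i] + positions[i - 1]
        for i in range(1, len(positions) - 1)
    ]
    return math.sqrt(sum(a * a for a in accel) / len(accel))


# Hypothetical tracks: a steady pan, and the same pan with +/-3 px of shake.
smooth = [100 + 2 * i for i in range(10)]
shaky = [100 + 2 * i + (3 if i % 2 else -3) for i in range(10)]

smooth_score = jitter_score(smooth)
shaky_score = jitter_score(shaky)
```

Comparing the two scores for clips generated from the same prompt gives a simple, repeatable way to rank outputs, though it says nothing about character or texture consistency.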

Creator's Toolkit: Maximizing Your Testing Efficiency

Testing the nuances between Seedance 1.0 and 1.5 Pro requires quick, simultaneous access to both models. Historically, this meant juggling multiple platform subscriptions and dealing with disparate credit systems.

You can streamline this entire workflow using ChatArt. ChatArt integrates the full Seedance model suite (including V1.0, 1.5 Pro, and many other leading models like Sora 2 and Nano Banana Pro) under a single, simplified subscription. This means you can run your stability tests, compare generation speeds, and instantly pivot between models without ever leaving the platform. Experience the full Seedance evolution without the subscription headache.

Now that we have established 1.0’s visual bedrock, let’s move on to the revolution brought by its successor.

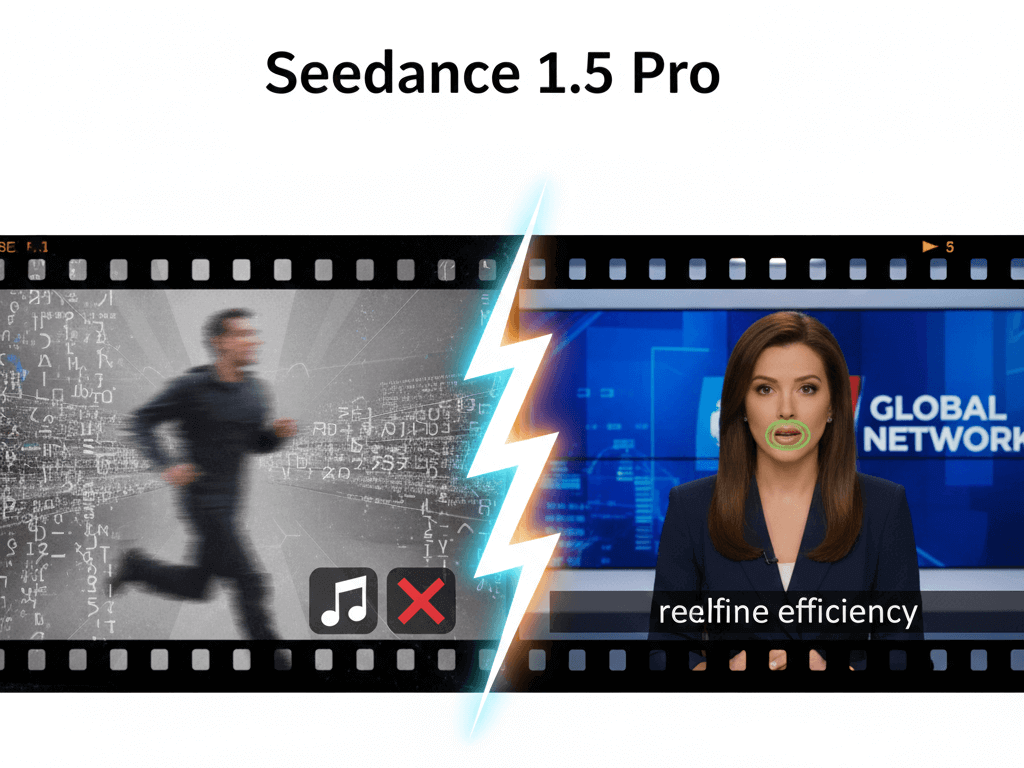

Seedance 1.5 Pro – The Revolution in A/V Synchronization

While Seedance 1.0 laid the groundwork with solid motion stability, Seedance 1.5 Pro delivers the feature that truly moves AI video into the professional production studio: Native Audio-Visual Joint Generation.

This is not merely stitching a video clip to an audio track; 1.5 Pro is trained to generate the visual and auditory elements simultaneously, ensuring they are inextricably linked. This solves the long-standing, career-breaking issue of out-of-sync audio and visuals (A/V sync).

Let’s put this professional-grade claim to the test.

The goal: To test 1.5 Pro’s ability to adapt a character's mouth movements (phonemes) to different spoken languages—a common roadblock in localization.

The Prompt Test

We tasked the model with generating a virtual spokesperson delivering the same message in two distinct languages:

English: "This new technology will redefine efficiency."

European Spanish: "Esta nueva tecnología redefinirá la eficiencia."

The model successfully adapted the lip movements to the unique sounds of each language. Unlike older models where mouth shapes often look generic or misaligned (a dead giveaway of cheap AI), 1.5 Pro delivered dialogue that looked natural and intentionally synchronized to the specific phonemes of the language being spoken. This capability drastically cuts down on post-production localization time.

For true immersion, a scene's sound effects (Foley) must be tightly timed with the physical action. Even a small delay between the impact and its sound breaks the effect. We tested the model's micro-timing precision with a high-impact, immediate action:

The Prompt Test

"A person, frustrated, slams a heavy, ornate glass onto a thick wooden tabletop."

Seedance 1.5 Pro demonstrated remarkable fidelity. The peak of the generated sound effect (the sharp 'thud' of the glass hitting the wood) aligned exactly with the moment of visual contact in the video frame. This proves the model generates the sound and the action as one integrated element, eliminating the manual A/V correction typically required in post-production.
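The alignment described above can be checked numerically. The following is a minimal sketch, not the procedure used in this review. It assumes the audio track is available as a list of samples and that the frame of visual contact has been identified by eye. The function name `av_offset_ms` and the sample values are hypothetical.

```python
def av_offset_ms(samples, sample_rate, contact_frame, fps):
    """Return audio-peak time minus visual-contact time, in milliseconds.

    Positive means the sound peaks after the visual contact.
    """
    # Index of the loudest sample (largest absolute amplitude).
    peak_index = max(range(len(samples)), key=lambda i: abs(samples[i]))
    audio_ms = 1000.0 * peak_index / sample_rate
    video_ms = 1000.0 * contact_frame / fps
    return audio_ms - video_ms


# Hypothetical clip: 1 s of audio at 48 kHz, silent except one spike at 0.5 s.
sample_rate = 48_000
samples = [0.0] * sample_rate
samples[24_000] = 0.9

# At 24 fps, frame 12 is displayed at 0.5 s, so the offset here is zero.
offset = av_offset_ms(samples, sample_rate, contact_frame=12, fps=24)
```

At 24 fps a single frame lasts about 41.7 ms, so an offset well under that value means the sound lands within the same frame as the visual contact.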

Looking Ahead – The Promise and Predictions for Seedance 2.0

While Seedance 1.5 Pro marks a monumental leap in professional A/V synchronization, no AI model is perfect. Addressing current limitations is crucial for understanding the trajectory of the next major release. The anticipation for Seedance 2.0 is high because it is expected to tackle the issues that still prevent AI from handling true feature-length production.

Acknowledging the Current Limitations of 1.5 Pro

Despite its excellence in synchronization, our extensive testing of 1.5 Pro reveals a few sticking points common to current AI video technology:

Shortcomings of Seedance 1.5 Pro

- Temporal Length: Video length remains constrained, typically maxing out around 5-12 seconds. Generating a minute-long, coherent scene requires tedious stitching.

- Semantic Inaccuracies (Information Gap): The model occasionally misses key details, leading to uncanny valley issues with human subjects and frequent errors in background text, signs, or logos, confirming a gap in its comprehensive world understanding.
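To put the stitching burden in numbers, here is a small sketch of the arithmetic, assuming every clip runs at a fixed length within the 5-12 second range cited above. The helper name `clips_needed` is hypothetical.

```python
import math


def clips_needed(target_seconds, clip_seconds):
    """Number of fixed-length clips required to cover the target duration."""
    return math.ceil(target_seconds / clip_seconds)


# A one-minute scene at the longest and shortest typical clip lengths.
fewest = clips_needed(60, 12)
most = clips_needed(60, 5)

# Every boundary between adjacent clips is a join that must be matched by hand.
fewest_joins = fewest - 1
most_joins = most - 1
```

Under these assumptions a one-minute scene needs between 5 and 12 clips, which means 4 to 11 joins where character, lighting, and audio continuity have to be matched manually.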

The Seedance 2.0 Breakthrough: Beyond the Short Clip

Based on these gaps and the established trend toward professionalization, we predict Seedance 2.0 will focus on solving three massive, persistent challenges—breakthroughs that will define the future of AI cinematography for years to come:

1. Extended Coherence (The Feature Film Horizon)

The Challenge: Current video length is constrained (5-12 seconds), breaking narrative flow.

The Prediction: 2.0 will support multi-minute narrative generation (60-180 seconds). It will maintain character identity, lighting continuity, and plot coherence over the entire scene, fundamentally shifting from generating a clip to generating a complete scene.

2. Advanced Physicality and Causality

The Challenge: Models lack sophisticated real-world physics and causal understanding.

The Prediction: 2.0 will integrate a stronger physics engine. It will understand causality—generating proper effects like burn marks from fire or erosion from water flow. This makes environment-based VFX far more realistic and reliable.

3. Real-Time / Low-Latency Generation

The Challenge: Generation time (tens of seconds) is too slow for high-volume or interactive use.

The Prediction: 2.0 will cut generation latency far enough to support high-volume batch work and interactive, near-real-time use.

Future-Proofing Your AI Investment

As we look toward the potential breakthroughs of Seedance 2.0, the need for a comprehensive, cost-effective toolkit becomes even greater. Why commit to one ecosystem when you can access the best of all worlds?

ChatArt currently integrates over 100 industry-leading AI models, making it the ultimate sandbox for creativity. Don't pay exorbitant fees for multiple subscriptions—access Seedance V1, 1.5 Pro, and prepare for 2.0 (and every other major new model) under one affordable plan. Secure your future AI toolkit now and enjoy massive savings.

FAQ

If Seedance 1.5 Pro is more advanced, why would I still use Seedance 1.0?

Seedance 1.0 remains valuable when you need fast, inexpensive, and visually stable clips without dialogue. It works especially well for background footage, abstract visuals, and projects where sound will be added later. For bulk production with limited budgets, it is still a smart choice.

What problem does Native Audio-Visual Joint Generation actually solve?

Native Audio-Visual Joint Generation in Seedance 1.5 Pro closes the timing gap between visuals and sound. It aligns lip movements, dialogue, sound effects, and on-screen actions with precise timing. This significantly reduces manual audio fixes in post-production.

Why does Seedance sometimes struggle with faces and background text, and what will change in the future?

Issues such as slightly unnatural faces or incorrect text usually come from limited semantic understanding. Seedance 2.0 is expected to use a richer world model with deeper object and human recognition. This should improve realism and accuracy across people, objects, and written elements in scenes.

Why discuss Seedance 2.0 and its potential breakthrough in physical causality?

Better causality means the system understands how actions change environments. For example, heat leaves burn marks and water leaves erosion. This matters because creators can produce complex scenes and visual effects inside the model instead of relying on traditional 3D tools and heavy compositing, while achieving more believable storytelling.

If I want the newest Seedance capabilities, what should I pay attention to?

Look for platforms that provide direct, immediate access to official model updates, such as ChatArt. Access to the latest versions ensures more stable results, better synchronization, and faster workflows for professional production.

Final Thoughts

To ensure you can flexibly use the right Seedance model for the job—whether it’s the cost-efficiency of 1.0 or the professional fidelity of 1.5 Pro—and to ensure you are ready the moment 2.0 drops, we strongly recommend a consolidated platform.

Don't subscribe to multiple services. Access the entire Seedance evolution, plus over 100 other cutting-edge AI models, through one affordable membership with ChatArt.
