Seedance 2.0 Review: Multi-Modal AI Video Editing Made Easy (2026)

Video AI is evolving at lightning speed. Sora, Kling, and Seedance take turns at the top of the charts, but the real game-changer isn't the model; it's the builders who turn these tools into products. ByteDance's advantage? Not raw power, but proximity to users. Before going global, it perfects one core experience. While competitors settle for "good enough," ByteDance is chasing "wow."

With Seedance 2.0, that "wow" has arrived. Beta testers describe a jolt to the system: a sudden clarity where everything finally clicks. This isn't just another update; it's a "Three-Body Moment" for AI video, folding human creativity into a higher-dimensional playing field.

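In this article, you will learn:

- Seedance 2.0's core breakthroughs
- How it performs in four real-world test cases
- What actually improved over Seedance 1.5 Pro
- How to create director-level AI videos with Seedance 2.0
- Where AI video with Seedance is heading next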

Seedance 2.0 Core Breakthroughs

Every update in 2.0 is aimed at solving the real pain points creators face. The results are nothing short of astonishing:

Four Breakthroughs that Redefine AI Video

- Perfect Character Consistency: Characters maintain identity and facial details across scenes—no more “AI face morphing.”

- Hollywood-Level Physics Simulation: Actions are smooth, environmental interactions realistic, fight scenes cinematic.

- Efficiency Boost: An HD clip renders in 2–5 seconds, so a single creator can now run a "full production crew," handling storyboarding, shooting, post-production, and audio alone.

- Multi-Modal Input: Accepts image, video, and audio references to lock in movement and rhythm, and even sync visuals to sound.

4 Real-World Test Cases: Director-Level Experience

Seedance 2.0 doesn’t just promise cinematic quality—it delivers it across a variety of creative scenarios. Here are four practical tests demonstrating its capabilities:

Prompt:

Generate a 15-second MV video. Keywords: stable composition / gentle push-pull camera / low-angle heroic feel / documentary yet high-end. A super wide-angle establishes the shot, slight low-angle tilt; cliff dirt road and a vintage travel car occupy the lower third, distant sea and horizon expand the space, sunset side backlight with volumetric light through dust particles, cinematic composition, realistic film grain, clothes fluttering in the breeze.

Prompt:

Camera follows a man in black running for his life, chased by a crowd. The shot switches to side tracking as he knocks over a roadside fruit stand, scrambles up, and keeps fleeing. Background: chaotic crowd sounds.

Want to see Seedance 2.0 go absolutely crazy? This YouTube test turns LeBron vs. Godzilla into a full-blown AI action movie.

Prompt:

@image1 as character reference, @video1 for action and expression changes, depict abstract behavior of eating instant noodles.

Prompt:

@image1 as first frame, zoom out to show the view outside the airplane window, clouds drifting slowly, one cloud dotted with colorful candy beans remains centered, then gradually morphs into @image2 ice cream. Camera pulls back into the cabin; @image3 sitting by the window reaches out to grab the ice cream, takes a bite, mouth covered with cream, face glowing with sweet joy. Video is synced to @video1 audio.

Together, these four tests show how far Seedance 2.0 has pushed AI video forward. Motion is smoother, scenes are more stable, and results feel cinematic rather than synthetic. What once took dozens of retries now works in a single pass.

More importantly, Seedance 2.0 enables a true multi-modal workflow, combining images, video, and audio into one seamless creative process. This delivers consistent characters, precise camera control, realistic effects, stronger narrative flow, clean audio, reliable beat sync, and longer coherent scenes.

In short, Seedance 2.0 moves AI video from experimentation to professional-grade production.

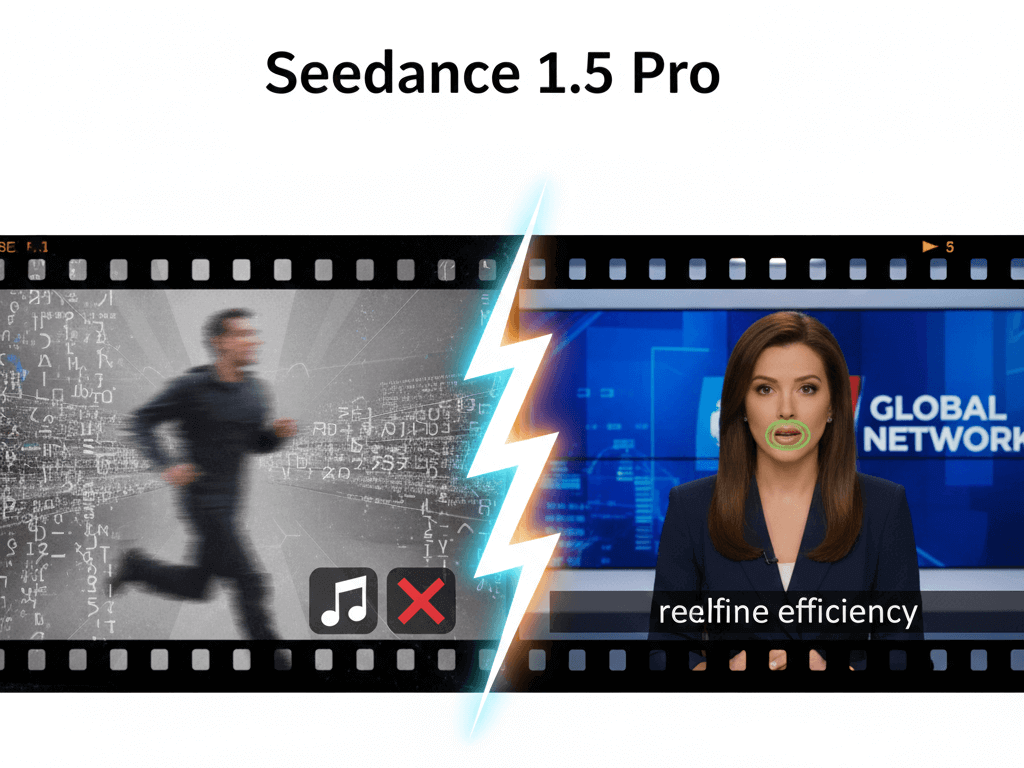

Seedance 2.0 vs. Seedance 1.5 Pro: What Actually Improved?

| Feature | Seedance 1.5 Pro | Seedance 2.0 |
|---|---|---|
| Character Consistency | Stable in short clips | Consistent across scenes, no face drift |
| Motion & Physics | Smooth, limited interaction | Realistic physics, collisions, gravity |
| Audio-Visual Sync | Accurate lip sync & timing | Multi-modal control, emotion-aware |
| Generation Speed | 5–7 s per HD clip | 2–5 s per HD clip |
| Reference Input | Single image/prompt | Multiple references, styles & storyboard |
| Scene Coherence | Short clips only | Multi-shot sequences, consistent flow |
| Workflow | Needs post-editing | Production-ready in one go |
| Accessibility | Semi-pro learning curve | Beginner-friendly, solo studio |

How to Create Director-Level AI Videos with Seedance 2.0 (3 Steps)

Seedance 2.0 is currently in limited beta. You can try it via:

Jimeng official platform: https://jimeng.jianying.com/

ChatArt (easiest option): Seedance 2.0 is already integrated via API into ChatArt, where you can try it for free alongside other AI tools for writing, novels, images, video, music, and continuously updated models, all in one platform.


Don’t rely on text alone. Combine references for full control:

- Image → defines visual style and character look
- Video → controls motion and camera movement
- Audio → sets rhythm, emotion, and timing

- Start simple: Image + audio for instant synced scenes
- Level up: Extend or edit existing videos (swap characters, add actions)
- Go pro: Generate full sequences from storyboard frames

Looking Ahead: The Future of AI Video with Seedance

Seedance 2.0 has solved most core AI video challenges, but creative demands keep evolving. Upcoming possibilities include:

- Extended Narrative Coherence: Generate continuous 60–180-second scenes while keeping character, lighting, and plot consistent.
- Advanced Physicality & Causality: Realistic environmental interactions and physics-based effects for truly immersive scenes.
- Real-Time & Low-Latency Generation: Faster outputs that enable interactive storytelling and large-scale production.

Together, these developments point to a future where AI video breaks traditional team limits, allowing creators to produce director-level content anytime, anywhere.

Frequently Asked Questions (FAQ)

1. Do I need professional equipment or a team to use it?

No. Seedance 2.0 is designed for solo creators. You can produce high-quality videos using just images, video clips, and audio references—no cameras, actors, or editing software required.

2. What kinds of inputs are supported?

Seedance 2.0 supports multi-modal inputs: images for style, video clips for motion, and audio for rhythm and emotion. Up to 12 files can be mixed in a single generation.

3. Can I extend or edit existing videos?

Yes. You can use the video extension feature to swap characters, add or remove content, or generate continuous multi-shot sequences—all while maintaining consistency.

4. How can I access Seedance 2.0?

The easiest way is through ChatArt, where you can try Seedance 2.0 for free, alongside other AI tools for writing, novels, images, video, music, and continuously updated models. Beta access is also available via the official Jimeng platform or API for developers.

Conclusion

Seedance 2.0 marks a new era in AI video creation—bringing director-level control, multi-modal precision, and rapid generation to solo creators. With its advanced features and future-ready capabilities, it empowers anyone to produce cinematic-quality content without traditional constraints.
